Data Store

Seq is driven by its own log-specific data store.

"Hot" log data is retained and queried through an in-memory layer called the cache, and a time-indexed on-disk layer called the archive provides access to historical information that does not fit into RAM.

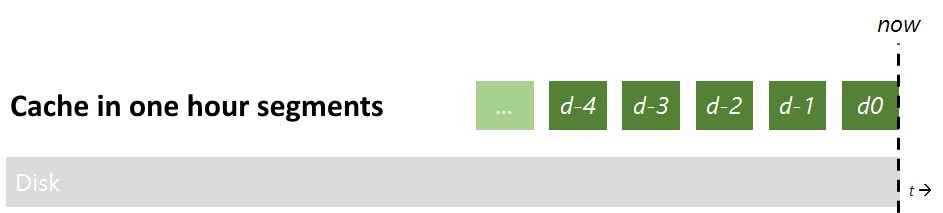

The Cache

Log data has a very specific access pattern: recent events are almost always more interesting than historical ones. Seq makes use of this to optimize the way machine resources are used.

The cache is a time-ordered list of segments. Each segment is a time slice; the duration of each slice is currently set at one hour.

As events arrive and RAM fills up, segments are dropped from the oldest end of the list, preserving the most recent events.

It’s a very simple strategy, but an effective one: query response times improve dramatically when all the data being queried is in the cache, and most queries of interest are satisfied by recent events.
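The eviction strategy can be made concrete with a minimal Python sketch of a time-ordered segment cache. It is illustrative only. `SegmentCache`, `Segment`, and `capacity_bytes` are hypothetical names. The sketch also uses each event's encoded size as a stand-in for RAM usage, which is an assumption and not how Seq measures memory.

```python
from __future__ import annotations
from dataclasses import dataclass, field

SEGMENT_SECONDS = 60 * 60  # one-hour slices, matching the current setting


@dataclass
class Segment:
    start: int                                   # slice start, Unix seconds
    events: list[str] = field(default_factory=list)
    size_bytes: int = 0


class SegmentCache:
    """Hypothetical cache: time-ordered segments, oldest evicted first."""

    def __init__(self, capacity_bytes: int) -> None:
        # Assumption: a byte budget stands in for available RAM.
        self.capacity_bytes = capacity_bytes
        self.used_bytes = 0
        self.segments: dict[int, Segment] = {}

    def add(self, timestamp: int, event: str) -> None:
        # Place the event in the one-hour segment containing its timestamp.
        start = timestamp - timestamp % SEGMENT_SECONDS
        segment = self.segments.setdefault(start, Segment(start))
        size = len(event.encode("utf-8"))
        segment.events.append(event)
        segment.size_bytes += size
        self.used_bytes += size
        self._evict()

    def _evict(self) -> None:
        # Drop whole segments from the oldest end until usage fits again,
        # always keeping at least the newest segment.
        while self.used_bytes > self.capacity_bytes and len(self.segments) > 1:
            oldest = min(self.segments)
            self.used_bytes -= self.segments.pop(oldest).size_bytes

    def segment_starts(self) -> list[int]:
        return sorted(self.segments)
```

In this sketch, evicting whole one-hour segments rather than individual events keeps eviction cheap, because only one bookkeeping entry changes per dropped hour.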

Query performance

Queries are normally serviced from the cache. When log data exceeds the size of available RAM, older events are brought into memory from the archive for querying. Querying the archive is a useful way to view historical data, but disk-based queries are significantly slower than in-memory ones. Seq deployments therefore normally need to be sized so that RAM capacity matches the volume of data required for day-to-day monitoring and diagnostics, usually at least 7, 14, or 30 days.
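The split between cache and archive can be sketched as a simple range calculation. For illustration only, this assumes the cache holds every event at or after a single timestamp `cache_oldest`, and the archive holds everything older. `plan_query` is a hypothetical helper and is not part of Seq.

```python
from __future__ import annotations


def plan_query(start: int, end: int,
               cache_oldest: int) -> dict[str, tuple[int, int] | None]:
    """Split the half-open range [start, end) into cache and archive parts.

    Hypothetical sketch: assumes the cache holds all events with
    timestamp >= cache_oldest and the archive holds everything older.
    """
    if start >= end:
        raise ValueError("empty time range")
    cache_part = None
    archive_part = None
    if end > cache_oldest:
        # Recent portion: served from RAM (fast).
        cache_part = (max(start, cache_oldest), end)
    if start < cache_oldest:
        # Older portion: must be read from the on-disk archive (slower).
        archive_part = (start, min(end, cache_oldest))
    return {"cache": cache_part, "archive": archive_part}
```

Any query that produces a non-empty `archive` part pays the cost of disk I/O. This is why sizing RAM to cover the usual query window matters.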

Effective use of retention policies to "thin out" older log data can keep longer periods of data in RAM.
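A rough sizing estimate shows why thinning helps. The function below is a hypothetical model, not a Seq formula. It assumes that each day of events costs `daily_bytes` of RAM and that all events are kept for `full_days`. After that, a retention policy keeps only `thinned_fraction` of each older day.

```python
def days_in_ram(ram_bytes: float, daily_bytes: float,
                full_days: int, thinned_fraction: float) -> float:
    """Hypothetical estimate of how many days of events fit in RAM.

    Assumes full_days of unthinned data, followed by older days reduced
    to thinned_fraction of their original volume by retention policies.
    """
    if not 0 < thinned_fraction <= 1:
        raise ValueError("thinned_fraction must be in (0, 1]")
    full_cost = full_days * daily_bytes
    if ram_bytes <= full_cost:
        # RAM runs out before thinning takes effect.
        return ram_bytes / daily_bytes
    remaining = ram_bytes - full_cost
    # Each older day now costs only a fraction of its original size.
    return full_days + remaining / (daily_bytes * thinned_fraction)
```

Consider 20 GB of RAM and 2 GB of events per day. In this model, that covers 10 days unthinned, but 19 days if events older than a week are thinned to a quarter of their volume.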

The diagnostics page in Seq provides some information on the relative size of the cache vs. the on-disk archive.

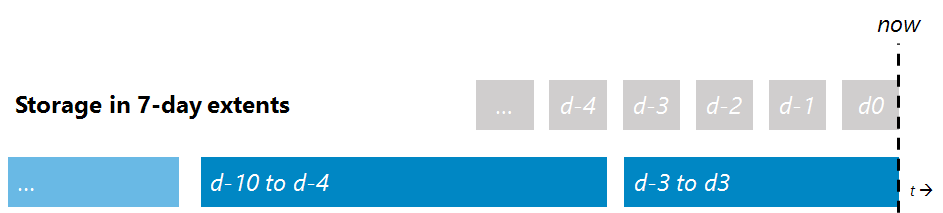

The Archive

On-disk data is stored in 7-day blocks called extents. These are kept in a folder called "Extents" under the storage root path.

Extents are completely self-contained, so individual extent folders can be deleted, moved, or backed up independently.
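Mapping an event to its 7-day extent is a floor division on time. For illustration only, the sketch below assumes that extent boundaries are aligned to the Unix epoch. Seq's actual alignment and folder naming are not described here, and `extent_start` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone

EXTENT_SPAN = timedelta(days=7)
# Assumption: extent boundaries are aligned to the Unix epoch.
EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)


def extent_start(ts: datetime) -> datetime:
    """Return the start of the hypothetical 7-day extent containing ts."""
    if ts.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    blocks = (ts - EPOCH) // EXTENT_SPAN  # whole 7-day blocks since epoch
    return EPOCH + blocks * EXTENT_SPAN
```

Each extent covers a disjoint time range, so an event belongs to exactly one extent. This is consistent with extents being independently movable or deletable.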

Each extent is an individual time-indexed span of compressed event data. The data files are managed using Flare, an event-oriented native storage engine.

Retention processing and compaction

Seq periodically processes retention policies and removes old data. Space is only freed once enough data is eligible for processing, so events may persist longer than the period specified by the retention policies that apply to them.

Once an extent contains no remaining data, the entire extent is removed.
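The interaction between eligibility and freed space can be sketched as follows. `Extent`, `apply_retention`, and the `min_eligible_fraction` threshold are all hypothetical. The sketch shows two behaviours. An extent with too few eligible events is left untouched, so some expired events survive. An extent that becomes empty is dropped entirely.

```python
from __future__ import annotations
from dataclasses import dataclass


@dataclass
class Extent:
    name: str
    timestamps: list[int]  # event timestamps, Unix seconds


def apply_retention(extents: list[Extent], cutoff: int,
                    min_eligible_fraction: float = 0.5) -> list[Extent]:
    """Hypothetical retention pass over a list of extents.

    Events older than cutoff are eligible for removal, but an extent is
    only rewritten when at least min_eligible_fraction of its events are
    eligible (an assumed threshold). Extents left empty are dropped.
    """
    kept = []
    for extent in extents:
        total = len(extent.timestamps)
        eligible = sum(1 for t in extent.timestamps if t < cutoff)
        if total and eligible / total >= min_eligible_fraction:
            # Enough work to be worthwhile: rewrite without expired events.
            extent.timestamps = [t for t in extent.timestamps if t >= cutoff]
        if extent.timestamps:
            kept.append(extent)
        # Otherwise the extent is empty and is removed as a whole.
    return kept
```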

The lifecycle of an event on disk

Seq uses an ingestion scheme that's optimized for the common case where events are ingested shortly after they're emitted and are deleted after some period of time.

Events in Seq are initially written into disk-backed buffers called ingest buffers. Each ingest buffer covers a fixed window of time, called the tick interval, and stores its events unsorted, because the priority at this stage is to persist events durably as quickly as possible.
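A minimal sketch of bucketing incoming events by tick interval is shown below. For simplicity it keeps buffers in memory, whereas real ingest buffers are disk-backed and durably persisted. The 5-minute `TICK_SECONDS` value is inferred from the consistency note and is an assumption, as is the `IngestBuffers` class.

```python
from __future__ import annotations
from collections import defaultdict

TICK_SECONDS = 5 * 60  # assumption: 5-minute tick interval


class IngestBuffers:
    """Hypothetical in-memory stand-in for disk-backed ingest buffers."""

    def __init__(self) -> None:
        # Tick interval start -> events in arrival order.
        self.buffers: dict[int, list[tuple[int, str]]] = defaultdict(list)

    @staticmethod
    def tick_start(timestamp: int) -> int:
        return timestamp - timestamp % TICK_SECONDS

    def append(self, timestamp: int, event: str) -> None:
        # Events are appended in arrival order with no sorting, which keeps
        # the write path as cheap as possible.
        self.buffers[self.tick_start(timestamp)].append((timestamp, event))
```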

A note on consistency

A batch of events ingested in a single tick interval will be consistent; either they'll all be successfully written or none of them will be.

A batch of events ingested over multiple tick intervals is not guaranteed to be consistent; the events in one 5-minute window may be successfully ingested while events in another 5-minute window fail.
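The consistency rule can be illustrated by grouping a batch by tick interval and committing each group all-or-nothing. In this hypothetical sketch, `failing_ticks` simulates a write failure for chosen intervals. A failing group does not roll back the others, which mirrors the guarantee described above. `group_by_tick`, `ingest`, and the 5-minute interval are assumptions.

```python
from __future__ import annotations
from collections import defaultdict

TICK_SECONDS = 5 * 60  # assumed tick interval


def group_by_tick(batch: list[tuple[int, str]]) -> dict[int, list[tuple[int, str]]]:
    groups: dict[int, list[tuple[int, str]]] = defaultdict(list)
    for ts, event in batch:
        groups[ts - ts % TICK_SECONDS].append((ts, event))
    return dict(groups)


def ingest(batch: list[tuple[int, str]],
           store: dict[int, list[tuple[int, str]]],
           failing_ticks: set[int] | frozenset[int] = frozenset()) -> dict[int, bool]:
    """Commit each tick's events all-or-nothing; report success per tick."""
    results = {}
    for tick, events in group_by_tick(batch).items():
        # Build the new contents without touching the store yet.
        staged = store.get(tick, []) + events
        if tick in failing_ticks:
            results[tick] = False  # simulated failure: nothing is written
            continue
        store[tick] = staged       # a single assignment "commits" the group
        results[tick] = True
    return results
```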

Data in ingest buffers is expected to be volatile. New events may still need to be written to a buffer while applications are likely to emit events that fall within its tick interval. Over time, the likelihood that further events will need to be written into an ingest buffer decreases. Once that likelihood is low enough, the data in that tick interval becomes available for reprocessing into a format that's optimized for querying from disk. A healthy server will be able to reprocess events before they drop out of the cache.
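The section above does not specify how Seq decides that a tick interval is unlikely to receive more events. One plausible heuristic, shown here as an assumption only, is to wait until the interval has ended and the buffer has seen no writes for a settling period. `SETTLE_SECONDS` and `ready_for_reprocessing` are hypothetical.

```python
TICK_SECONDS = 5 * 60     # assumed tick interval
SETTLE_SECONDS = 15 * 60  # hypothetical wait for late-arriving events


def ready_for_reprocessing(tick_start: int, now: int, last_write: int) -> bool:
    """Hypothetical test for whether an ingest buffer has 'settled'.

    The buffer is treated as stable once its tick interval has ended plus a
    settling period, and no event has been written to it for that period.
    """
    tick_end = tick_start + TICK_SECONDS
    return (now >= tick_end + SETTLE_SECONDS
            and now - last_write >= SETTLE_SECONDS)
```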

Events are then copied from ingest buffers into query-optimized stable spans. Stable spans are large, ordered, indexed, immutable files that can be efficiently queried from disk. For events in stable spans, Seq uses signals as the basis of a page-based indexing scheme that minimizes disk I/O during queries. These indexes are created for each signal in the background while retention processing is not running.
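Page-based indexing can be sketched by sorting events into fixed-size pages and recording, for each signal, which pages contain a match. A query then reads only those pages. This is a simplified illustration. `PAGE_SIZE`, the helper functions, and the representation of a signal as a Python predicate are assumptions, not Seq's on-disk format.

```python
from __future__ import annotations
from typing import Callable

PAGE_SIZE = 4  # events per page; deliberately tiny for illustration

Event = tuple[int, dict]  # (timestamp, properties)


def build_stable_span(events: list[Event]) -> list[list[Event]]:
    """Sort events by timestamp and cut them into fixed-size, immutable pages."""
    ordered = sorted(events, key=lambda e: e[0])
    return [ordered[i:i + PAGE_SIZE] for i in range(0, len(ordered), PAGE_SIZE)]


def build_signal_index(pages: list[list[Event]],
                       signal: Callable[[dict], bool]) -> set[int]:
    """Record which pages contain at least one event matching the signal."""
    return {n for n, page in enumerate(pages)
            if any(signal(props) for _, props in page)}


def query(pages: list[list[Event]], index: set[int],
          signal: Callable[[dict], bool]) -> list[Event]:
    # Only pages listed in the index are read; all others are skipped.
    return [e for n in sorted(index) for e in pages[n] if signal(e[1])]
```

With events at timestamps 1 to 10 and errors at 3 and 9, only pages 0 and 2 are read to answer an error query. The page holding timestamps 5 to 8 is never touched.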
