Indexing

Signal indexes reduce the volume of data that queries must read from disk. The more signal indexes that are active to narrow down a search, the less data Seq needs to search.

To take advantage of indexing, select one or more signals before issuing a search or query, and use signals to select the source data when creating dashboards or alerts.

Signals are most effective when they select a small proportion of the log. The benefit an index provides also depends on the size of the events being searched and on the overlap between signals.

How do Signal Indexes work?

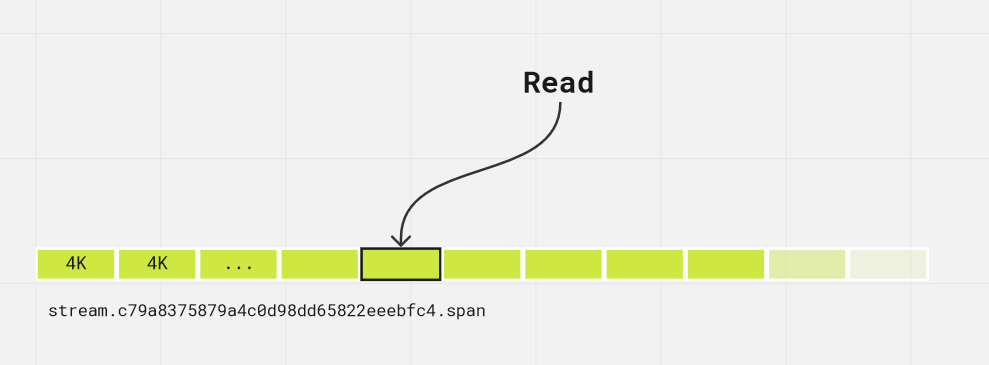

Each file in the log stream managed by Seq is naturally partitioned into pages by the host operating system and virtual memory manager:

File divided into 4 KiB pages. Read operations work in page-sized units.

When Seq reads events from storage, it must do so in page-sized chunks, as these are the units in which the OS and virtual memory manager work.
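To make the page-sized read unit concrete, here is a minimal Python sketch. It assumes a fixed 4 KiB page size, and `page_of` and `page_aligned_range` are hypothetical helpers for illustration, not part of Seq. Even a small read that crosses a page boundary costs two whole pages of I/O.

```python
PAGE_SIZE = 4096  # 4 KiB pages, as described above

def page_of(offset: int) -> int:
    """Return the index of the page that contains the given byte offset."""
    return offset // PAGE_SIZE

def page_aligned_range(offset: int, length: int) -> tuple[int, int]:
    """Return the page-aligned (start, end) byte range that must be read
    to cover `length` bytes starting at `offset` (length > 0)."""
    start = page_of(offset) * PAGE_SIZE
    # Round the end of the requested range up to the next page boundary.
    end = -(-(offset + length) // PAGE_SIZE) * PAGE_SIZE
    return start, end

# A 300-byte event starting at offset 4000 straddles pages 0 and 1,
# so two whole pages (8 KiB) must be read.
print(page_aligned_range(4000, 300))  # (0, 8192)
```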

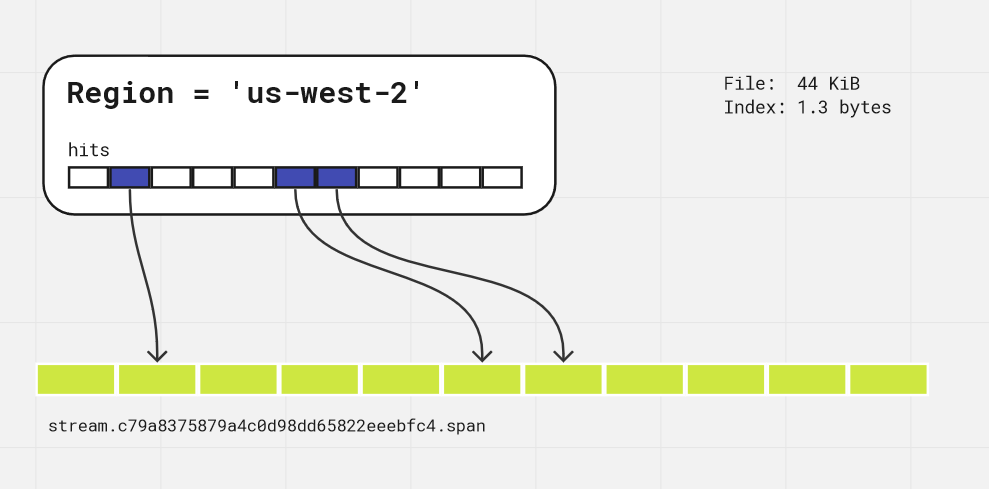

A signal index is a bitmap index, where each page in the log stream corresponds to a single bit in the index. If the page contains events that match the signal, the corresponding bit is "on"; otherwise, it is "off".

When Seq searches within a signal, it only needs to retrieve the pages that the index marks as possibly containing matching events, so the total amount of I/O required is drastically reduced:

Signal matching events in a particular region. The "on" bits in the signal index correspond to pages in the log stream that contain matching events.
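The page-level bitmap can be sketched in Python as follows. This is a simplified illustration, not Seq's implementation: it assumes each event is known by its byte offset and size, represents the signal as a plain predicate function, and stores the bitmap in a Python `int`. The names `build_signal_index` and `pages_to_read`, and the sample events, are hypothetical.

```python
from typing import Callable, Iterable

PAGE_SIZE = 4096  # 4 KiB pages, as above

def build_signal_index(events: Iterable[tuple[int, int, dict]],
                       matches: Callable[[dict], bool]) -> int:
    """Return a bitmap (as an int) with bit i set when page i holds at least
    one event that satisfies the signal's filter.

    `events` yields (byte_offset, size_in_bytes, event) triples.
    """
    bitmap = 0
    for offset, size, event in events:
        if matches(event):
            # An event that straddles a page boundary marks every page it touches.
            first = offset // PAGE_SIZE
            last = (offset + size - 1) // PAGE_SIZE
            for page in range(first, last + 1):
                bitmap |= 1 << page
    return bitmap

def pages_to_read(bitmap: int, page_count: int) -> list[int]:
    """Only pages whose bit is "on" need to be fetched from disk."""
    return [page for page in range(page_count) if (bitmap >> page) & 1]

# Hypothetical log stream spanning four pages (0..3).
events = [
    (0, 200, {"Level": "Information"}),   # page 0
    (5000, 150, {"Level": "Error"}),      # page 1
    (12000, 300, {"Level": "Error"}),     # straddles pages 2 and 3
]
errors = build_signal_index(events, lambda e: e["Level"] == "Error")
print(pages_to_read(errors, page_count=4))  # [1, 2, 3]
```

Page 0 is never read for this signal, because no matching event lives there.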

When several signals are selected on the Seq Events screen, their bitmap indexes are combined using bitwise operations. For example, combining the Errors signal with the signal shown above further restricts the search space to only those pages that contain matches for both signals.
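A minimal Python sketch of the intersection step, assuming each signal's bitmap is held as a Python integer (one bit per page) and that selecting several signals intersects them with bitwise AND. The bit patterns and the helper names `combine_signals` and `set_pages` are hypothetical.

```python
import operator
from functools import reduce

def combine_signals(first: int, *rest: int) -> int:
    """AND together the bitmaps of all selected signals; a page's bit stays
    on only if the page contains matches for every selected signal."""
    return reduce(operator.and_, rest, first)

def set_pages(bitmap: int) -> list[int]:
    """List the page numbers whose bit is on."""
    return [i for i in range(bitmap.bit_length()) if (bitmap >> i) & 1]

errors = 0b1110  # hypothetical: the Errors signal matches pages 1, 2 and 3
other = 0b0101   # hypothetical: another signal matches pages 0 and 2
print(set_pages(combine_signals(errors, other)))  # [2]
```

Because the bitmap is page-granular, a surviving page is only a candidate: Seq still has to scan it to find the individual events that match both signals.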

Signal indexes are extremely space-efficient: the size of an index is approximately 0.003% of the size of the indexed log data, making signal indexes much easier to maintain than alternatives, which frequently exceed the size of the indexed data set.
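The 0.003% figure follows directly from the one-bit-per-page layout. The arithmetic below assumes 4 KiB pages and ignores any per-index overhead that Seq's on-disk format may add; the 1 TiB data size is just an illustrative input.

```python
PAGE_SIZE_BYTES = 4096
BITS_PER_BYTE = 8

# One index bit covers one 4 KiB page, i.e. 4096 * 8 = 32,768 bits of log data.
ratio = 1 / (PAGE_SIZE_BYTES * BITS_PER_BYTE)
print(f"{ratio:.5%}")  # 0.00305%

# For example, 1 TiB of log data needs roughly 32 MiB of index per signal.
data_bytes = 1024 ** 4
index_bytes = data_bytes * ratio
print(index_bytes / 1024 ** 2)  # 32.0
```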

Indexing considerations

- Indexing won't be applied to any data written in the last hour: this prevents churn caused by events that arrive slightly late, or by events from applications running on machines with clock drift.

- Indexing also doesn't trigger until at least 160 MB of contiguous data are available to be indexed.

- When signals change, recent data is reindexed first. If signals are modified constantly, for example by an automated process or by users, some older data may be left with stale indexes (this affects only performance, not correctness).

- Indexing competes with retention policy processing for time. If retention policies are running efficiently, the remaining time is used for indexing; if retention policies are running at capacity (indicated by single-digit "headway" numbers in the logs), indexing won't trigger.

- Historical writes, for example importing a day's worth of logs timestamped one week ago, won't be indexed unless retention processing compacts them into the indexed log stream. They will still be searchable, but indexes will not improve performance over the imported range.
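The age, size and retention rules above can be read together as a simple eligibility check. The sketch below is hypothetical: the function name, its parameters, the `retention_at_capacity` flag, and the interpretation of "160 MB" as decimal megabytes are all assumptions, and it does not model stale indexes or historical writes.

```python
from datetime import datetime, timedelta, timezone

MIN_AGE = timedelta(hours=1)             # data written in the last hour is skipped
MIN_CONTIGUOUS_BYTES = 160 * 1000 ** 2   # "160 MB"; decimal megabytes is an assumption

def eligible_for_indexing(last_write: datetime, contiguous_bytes: int,
                          now: datetime, retention_at_capacity: bool) -> bool:
    """Hypothetical check that mirrors the documented rules; not Seq's code."""
    if retention_at_capacity:
        return False  # retention processing has priority; indexing won't trigger
    if now - last_write < MIN_AGE:
        return False  # too recent: late or clock-drifted events may still arrive
    return contiguous_bytes >= MIN_CONTIGUOUS_BYTES

now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
print(eligible_for_indexing(now - timedelta(hours=2), 200 * 1000 ** 2, now, False))     # True
print(eligible_for_indexing(now - timedelta(minutes=30), 200 * 1000 ** 2, now, False))  # False
print(eligible_for_indexing(now - timedelta(hours=2), 100 * 1000 ** 2, now, False))     # False
```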
