Setting up a Disaster Recovery (DR) Instance

Setting up a second Seq instance ensures all data is retained, and events can continue to be ingested, if the first Seq instance is lost or needs to be taken offline for maintenance.

Disaster Recovery is not supported by all Seq subscription types. Please refer to the subscription tier details on datalust.co and contact us if you need any help with licensing.

Overview

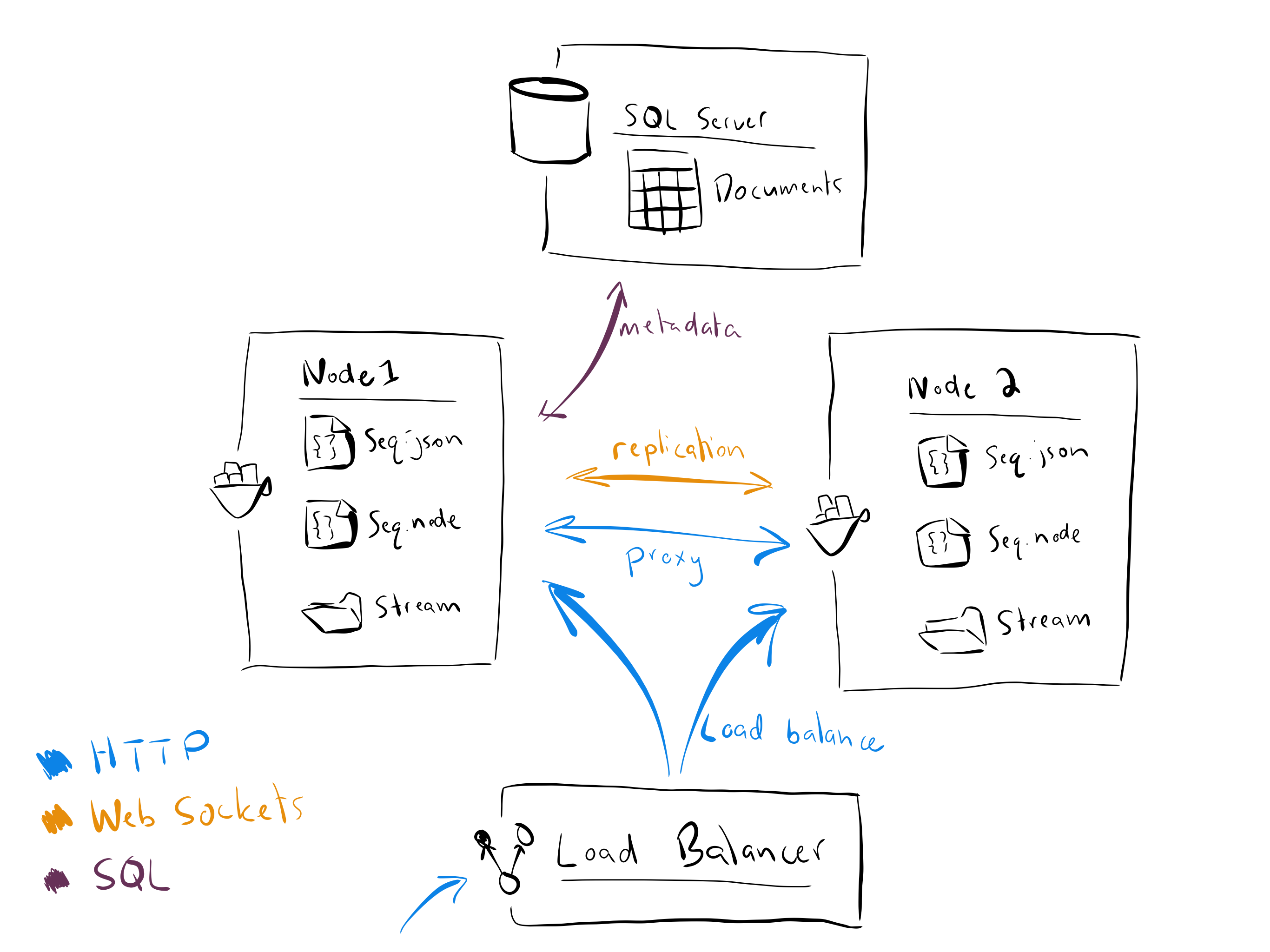

Seq's DR configuration relies on two Seq instances ("cluster nodes") behind an HTTP load balancer.

Each node in the cluster uses a local storage volume to maintain a complete copy of the event data held in the cluster.

Nodes share a single highly-available database - either Microsoft SQL Server, Azure SQL Database, or PostgreSQL - to store metadata including users, signals, dashboards, and so on.

A single node is designated "leader", and handles ingestion and API requests. The other node acts in the "follower" role, and continually synchronizes its local log store with changes made on the leader. Replication to the follower node is asynchronous.

The load balancer may route traffic to either or both nodes: when requests arrive at the follower node, they are internally proxied to the leader. This avoids the need to reconfigure the load balancer when the leader role is passed between nodes, and when nodes are temporarily taken offline.

An overview of the major elements of Seq in a two-node DR configuration.
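The routing behavior described above can be modeled in a few lines of Python. This is a toy, in-memory sketch and not anything Seq ships: the `Node` class, its `handle` method, and the request strings are hypothetical names chosen purely for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class Node:
    """Hypothetical stand-in for a Seq cluster node (not a Seq API)."""
    name: str
    is_leader: bool = False
    peer: Optional["Node"] = None

    def handle(self, request: str) -> str:
        # The leader serves ingestion and API requests itself.
        if self.is_leader:
            return f"{self.name} served {request}"
        # A follower proxies the request to its peer, the leader.
        if self.peer is None or not self.peer.is_leader:
            raise RuntimeError("no leader available; manual fail-over required")
        return self.peer.handle(request)


def make_cluster() -> Tuple[Node, Node]:
    """Build a two-node cluster with seq001 as the initial leader."""
    first = Node("seq001", is_leader=True)
    second = Node("seq002")
    first.peer, second.peer = second, first
    return first, second
```

Whichever node the load balancer picks, the request ends up at the leader, which is why the load balancer needs no reconfiguration when the leader role moves.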

A two-node Seq cluster supports the following additional scenarios that cannot be reliably implemented using a single Seq instance:

- Disaster Recovery (DR) — when one of the two nodes fails, it can be replaced without losing already-ingested data, or system configuration. If the failing node is the leader, manual intervention is required for disaster recovery, so ingestion and queries will be unavailable until fail-over is performed. Failure of the follower node will not prevent ingestion.

- Zero-downtime Maintenance and Upgrades — when maintenance needs to be performed, the leader node can be gracefully failed-over to bring it offline, while the follower takes over as the new leader node. This process can be used to perform Seq, system, and hardware maintenance and upgrades without significant interruption of ingestion or queries.

Clustering roadmap

DR is the first of three phases in the development of Seq's multi-node features. Each phase will be largely compatible with those before it, so over time, additional capabilities can be added to a Seq cluster.

The additional capabilities are:

- High Availability (HA) — in this configuration, a cluster of three or more nodes will perform automatic leader election, so that manual fail-over is not required in order to continue ingestion and API availability when one or more of the cluster nodes fail.

- Scale-out — in this configuration, queries issued in an HA cluster will make use of multiple machines to increase performance/reduce execution time.

This won't be the end of Seq's clustering story, and there are many more opportunities to pursue. In the near term, however, our development efforts are focused primarily on these two capabilities.

Requirements

The System Requirements and Deployment Checklist should be reviewed along with the DR-specific items below.

Licensing

Disaster Recovery is not supported by all Seq subscription types. Please refer to the subscription tier descriptions on datalust.co and contact us if you need any help with licensing.

Hardware

DR and zero-downtime upgrades work best when Seq is deployed onto two separate machines.

The machines don't need to be identical, as long as they can both handle being the main "leader" node serving traffic and queries. Keeping both machines at a useful level of capability will provide the best experience. The standard System Requirements apply to each node.

Because queries are served by the leader node, and it's selected manually, it's possible to have one larger machine for normal operations, and a smaller follower node for DR and to handle ingestion during upgrades, if this helps reduce costs.

The machines both need the same kind of local storage as would be used in a single-node configuration.

Network and firewall

It's best to have a fast network between the machines, though cross-datacenter replication should be possible with sufficient bandwidth.

The nodes will communicate with each other via two open ports:

- An internal API port used for proxied HTTP requests, which can be the same port (80 or 443) that's serving regular API traffic, and

- A cluster port; our examples use 5344; this is used for WebSocket connections between the nodes.

Database

A recent Microsoft SQL Server/Azure SQL Database, or PostgreSQL server, is required for metadata storage.

An initial, empty database should be created for Seq, and a user account provisioned with appropriate permissions to manage both schema and data within the database.

Seq will create schemas, tables, and other database objects on first run.

Load balancer

A load balancer in front of the two instances will ensure that API access and ingestion can continue while one of the instances is offline.

The load balancer should check /health on the regular HTTP API endpoints of the two nodes (note that /health is at the root, it's not /api/health), and take a node out of rotation if GET /health returns a status code other than 200.

It doesn't matter how the load balancer routes traffic - the follower node acts as a web proxy to the leader node, so requests arriving at one node will be internally routed to the other via its API port.

Using round-robin or similar routing will keep each node hot, and may improve the chances of detecting problems prior to attempted fail-over, so this is the recommended default.
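As a rough illustration of round-robin routing combined with health checks, the sketch below rotates through the nodes and skips any that fail a caller-supplied check. It models what a load balancer does internally and is not configuration for any particular product; the `RoundRobin` class and the `is_healthy` callback are hypothetical names.

```python
from typing import Callable, List, Optional


class RoundRobin:
    """Rotate through nodes, skipping those that fail the health check."""

    def __init__(self, nodes: List[str], is_healthy: Callable[[str], bool]):
        self._nodes = list(nodes)
        self._is_healthy = is_healthy
        self._next = 0

    def pick(self) -> Optional[str]:
        # Try each node at most once per call, starting after the last pick.
        for _ in range(len(self._nodes)):
            node = self._nodes[self._next]
            self._next = (self._next + 1) % len(self._nodes)
            if self._is_healthy(node):
                return node
        # Every node is out of rotation.
        return None
```

With both nodes healthy, successive calls to `pick()` alternate between them. When one node is taken out of rotation, all traffic goes to the other, and it is still proxied to the leader where necessary.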

Security considerations

Intra-cluster traffic

Communication between nodes is via HTTP and WebSockets. If the two machines are isolated, it's possible to run them without TLS, but if you want to use HTTPS and WSS, then you'll need SSL certificates in password-protected PFX format for the DNS names through which the machines will see each other.

It's not necessary for the internal hostnames to be the same as the public Seq hostname; e.g. Seq might be https://seq.example.com and internally the HTTP API endpoint used for intra-cluster traffic can be https://seq.example.local.

On Windows, the internal HTTP API endpoint will need SSL applied using the normal bind-ssl command used for Seq's other HTTP endpoints.

For cluster traffic on Windows, and for both cluster and internal API traffic on Linux, TLS is applied by specifying https:// or wss:// for the endpoint addresses, and including <hostname>-<port>.pfx or <port>.pfx files under the Certificates/ folder in the Seq storage root (this may need to be created if it does not already exist).
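To make the file naming convention concrete, this small sketch derives the two PFX file names mentioned above from an endpoint URI. The helper name is hypothetical, and falling back to port 443 when the URI omits a port is an assumption rather than documented Seq behavior. The sketch also says nothing about which of the two names Seq prefers when both exist.

```python
from typing import List
from urllib.parse import urlsplit

# Assumption: an https:// or wss:// URI without an explicit port uses 443.
DEFAULT_TLS_PORTS = {"https": 443, "wss": 443}


def pfx_candidates(endpoint: str) -> List[str]:
    """Return the PFX file names that would match a TLS endpoint URI."""
    parts = urlsplit(endpoint)
    if parts.scheme not in DEFAULT_TLS_PORTS:
        # http:// and ws:// endpoints don't use TLS, so no certificate applies.
        return []
    port = parts.port or DEFAULT_TLS_PORTS[parts.scheme]
    return [f"{parts.hostname}-{port}.pfx", f"{port}.pfx"]
```

For example, `pfx_candidates("wss://seq001.example.local:5344")` yields `seq001.example.local-5344.pfx` and `5344.pfx`, either of which would be placed under the Certificates/ folder.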

The password for the PFX file(s) needs to be configured using:

seq secret set -k certificates.defaultPassword -v <password>

Docker CLI commands

Commands like seq secret set can be run using the Docker container with the storage volume attached, using docker run [...] datalust/seq secret set [args], or using init scripts in the container by invoking the seqsvr command.

Don't configure the certificate password until the storage.secretKey setting has been applied to each node (see "Getting started", below).

Cluster authentication key

The leader node authenticates cluster traffic from follower nodes using a single fixed key, which is set up as part of node configuration. The key is an arbitrary string of characters that must be given the same value on each node.

Secret storage

Because clustered Seq configurations need to locally store sensitive information such as connection strings, on Linux we recommend keeping the secret key in the SEQ_STORAGE_SECRETKEY environment variable, instead of in Seq.json.

On Windows, the value in Seq.json will be encrypted with machine-scope DPAPI. Access to the Seq.json file and root storage folder should be restricted to the user account that the Seq service runs under (or administrators, if running as Local System).

Getting started

The first step to setting up Seq in a two-node, DR configuration, is to configure the initial leader node. The follower node is configured next and will synchronize itself with the leader's state.

It's possible to work through these instructions yourself to set things up, but we're also glad to help, if you'd rather deploy it in collaboration with one of the engineers from the Seq team. Just drop us a line via [email protected], and we'll make sure the process is smooth and hassle-free!

If you have an existing Seq instance...

The first step when adding DR to an existing Seq instance is to migrate its metadata store to the database that will be shared between cluster nodes.

This is done using seq metastore to-mssql or seq metastore to-postgresql. Both commands have very similar syntax; the to-mssql version is shown below:

seq metastore to-mssql --connection-string="<connection string here>"

If you're using Docker, then replace the seq command with docker run -it -v (volume mapping) datalust/seq. Inside the Docker container, for example when running init scripts, replace seq with seqsvr.

The connection string will be encrypted with the Seq instance's secret key, and stored in the Seq filesystem root under Secrets/.

Later, to set up the follower node, you'll need the secret key from the existing node. If you don't have it in external storage already, you should retrieve it now using:

seq show-key

If you're starting with a clean server

To set up a brand new node as leader, install Seq (if on Windows), but don't install or start the Seq service.

Instead, generate a new secret key using:

seq show-key --generate

Then create a new configuration and initialize it with the secret key:

seq config create

seq config set -k storage.secretKey -v "<secret key>"

Configuring the leader

The first thing to do is to set up the connection to the shared database:

seq secret set -k metastore.msSql.connectionString -v "<connection string>"

So that inbound links generated for redirects and in alert notifications are correct, you need to set api.canonicalUri to the address of the load balancer.

seq config set -k api.canonicalUri -v "<address>"

Setting a canonical URI

It's important to specify a canonical URI when configuring a Seq cluster, so that each node can generate correct external URIs.

Next, configure the cluster network and authentication key (PowerShell syntax shown):

seq node setup `
  --cluster-listen ws://localhost:5344 `
  --internal-api http://localhost `
  --peer-cluster ws://seq002.example.local:5344 `
  --peer-internal-api http://seq002.example.local `
  -k "<cluster authentication key>" `
  --node-name seq001.example.local

| Option | Description |
|---|---|
| cluster-listen | The URI on which to listen for intra-cluster messages. |
| internal-api | The URI on which to listen for Seq API requests. If you're using a different port for internal API traffic, you'll need to add its scheme://hostname:port combination to the api.listenUris setting, too. |
| peer-cluster | The URI on which the other server is listening for intra-cluster messages. |
| peer-internal-api | The URI on which the other server is listening for Seq API requests. |
| k | The cluster authentication key. The authentication key functions as an API key that cluster nodes use to authenticate each other; all nodes in the cluster should share the same authentication key. Obtain a strongly random value for this using a password generator. |
| node-name | The --node-name value is informational, and can be any string useful for identifying the individual node. |
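If no password generator is at hand, a strongly random cluster authentication key can be produced with Python's standard `secrets` module. This is one possible approach rather than a Seq requirement; the function name and the 32-byte default are assumptions made for this sketch.

```python
import secrets


def generate_cluster_key(num_bytes: int = 32) -> str:
    """Return a URL-safe random string drawn from the OS's secure random source."""
    return secrets.token_urlsafe(num_bytes)


if __name__ == "__main__":
    # Print one key; configure the same value on every node in the cluster.
    print(generate_cluster_key())
```

Generate the key once and supply the same value to -k on both nodes. Generating a separate key per node would prevent the nodes from authenticating each other.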

Finally, configure the node as leader, and start it:

seq node lead

seq service start

You should now be able to browse Seq at its regular address, and via the load balancer.

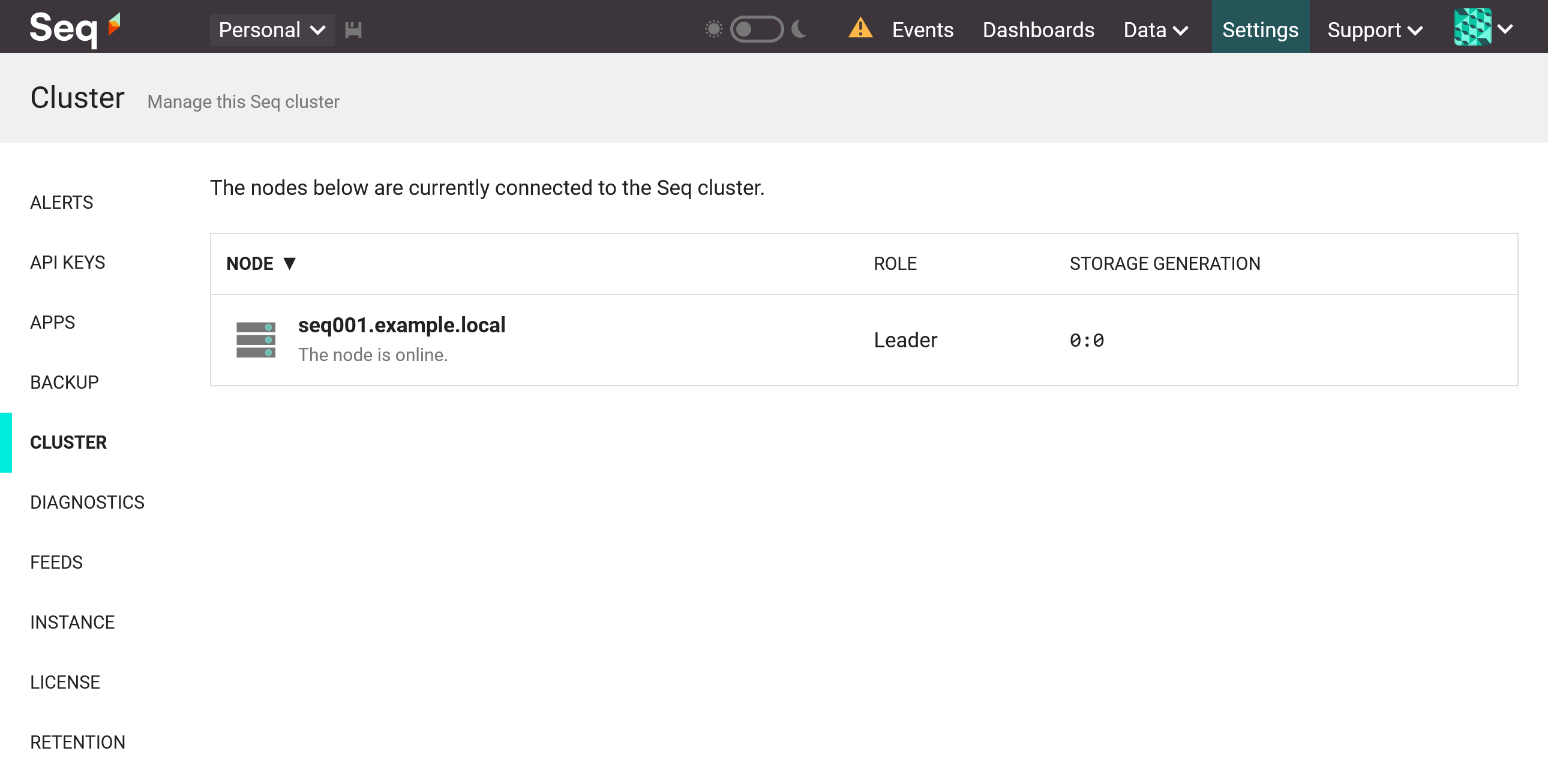

Under Settings, you should see a new Cluster item. The Cluster screen will show only one node - the one you're browsing - and you'll also see a message in the notification area warning you that there's no up-to-date follower.

Seq Cluster screen with leader only.

Configuring the follower

Configuring the follower node is almost identical to configuring the leader node; it's important to note:

- The storage.secretKey and cluster authentication key values must be the same as those used by the leader,

- The peer URIs configured with seq node setup must point to the leader node, and

- The seq node lead command is not executed on the follower.

The full configuration process (PowerShell syntax) looks like:

seq config create

seq config set -k storage.secretKey -v "<secret key>"

seq secret set -k metastore.msSql.connectionString -v "<connection string>"

seq config set -k api.canonicalUri -v "<address>"

seq node setup `
  --cluster-listen ws://localhost:5344 `
  --internal-api http://localhost `
  --peer-cluster ws://seq001.example.local:5344 `
  --peer-internal-api http://seq001.example.local `
  -k "<cluster authentication key>" `
  --node-name seq002.example.local

seq service start
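The leader and follower invocations of seq node setup differ only in the peer addresses and the node name. The sketch below builds both argument lists from the same inputs to make that symmetry explicit. The helper function is hypothetical; the options and URI shapes are taken directly from the examples in this document.

```python
from typing import List


def node_setup_args(node_name: str, peer_name: str, auth_key: str,
                    cluster_port: int = 5344) -> List[str]:
    """Build the `seq node setup` argument list for one node of the pair."""
    return [
        "seq", "node", "setup",
        "--cluster-listen", f"ws://localhost:{cluster_port}",
        "--internal-api", "http://localhost",
        # The peer URIs always point at the *other* node.
        "--peer-cluster", f"ws://{peer_name}:{cluster_port}",
        "--peer-internal-api", f"http://{peer_name}",
        # The same authentication key is used on both nodes.
        "-k", auth_key,
        "--node-name", node_name,
    ]


if __name__ == "__main__":
    key = "<cluster authentication key>"
    print(" ".join(node_setup_args("seq001.example.local", "seq002.example.local", key)))
    print(" ".join(node_setup_args("seq002.example.local", "seq001.example.local", key)))
```

Generating both commands from one place reduces the chance of a mismatched key or a peer URI that points back at the node itself.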

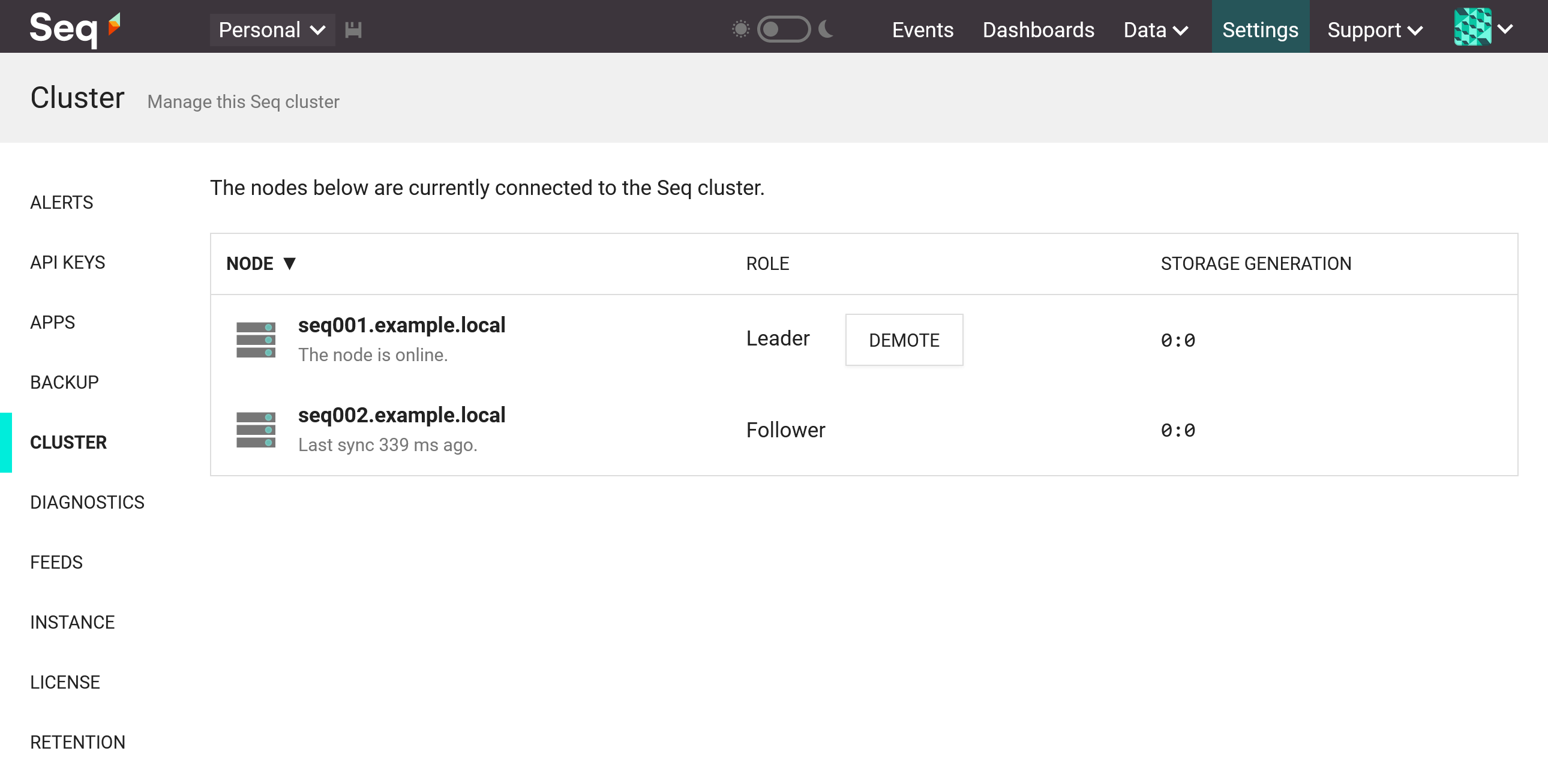

When the follower has started, it will appear in the Cluster screen:

The Seq cluster screen with follower connected.

It's time to start ingesting some events!

Make sure client applications are configured to log via the load balancer, not the direct ingestion endpoints on the Seq nodes.

Node and cluster health

Probing for node health

To check the state of a node, browse to it directly: if it's successfully serving or proxying requests, you'll see the Seq user interface.

Because all HTTP requests that reach the follower are proxied to the leader, an unhealthy follower that can still reach the leader can appear healthy. To work around this, a special /health endpoint must be used to check the health of the follower node itself. Making a GET request to the root /health endpoint will result in 200 if the follower itself is healthy, and 5xx otherwise (/health is not proxied between nodes in the cluster).
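A health probe along these lines can be sketched with the Python standard library. It treats a node as healthy only when GET /health at the root returns 200, and anything else (an error status, a timeout, a refused connection) as unhealthy. The function names and the injectable `fetch` parameter are hypothetical conveniences for this example.

```python
import urllib.error
import urllib.request


def health_url(node_base_url: str) -> str:
    """Return the root /health URL for a node (note: not /api/health)."""
    return node_base_url.rstrip("/") + "/health"


def node_is_healthy(node_base_url: str, timeout: float = 5.0,
                    fetch=urllib.request.urlopen) -> bool:
    """Return True only if GET /health on the node itself responds with 200."""
    try:
        with fetch(health_url(node_base_url), timeout=timeout) as response:
            return response.status == 200
    except (urllib.error.URLError, OSError):
        # urlopen raises HTTPError (a URLError) for 4xx/5xx responses.
        return False
```

Point the probe at each node's own address, for example `node_is_healthy("http://seq002.example.local")`, and not at the load balancer. Otherwise the result only shows that some node is healthy.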

Last sync time and storage generations

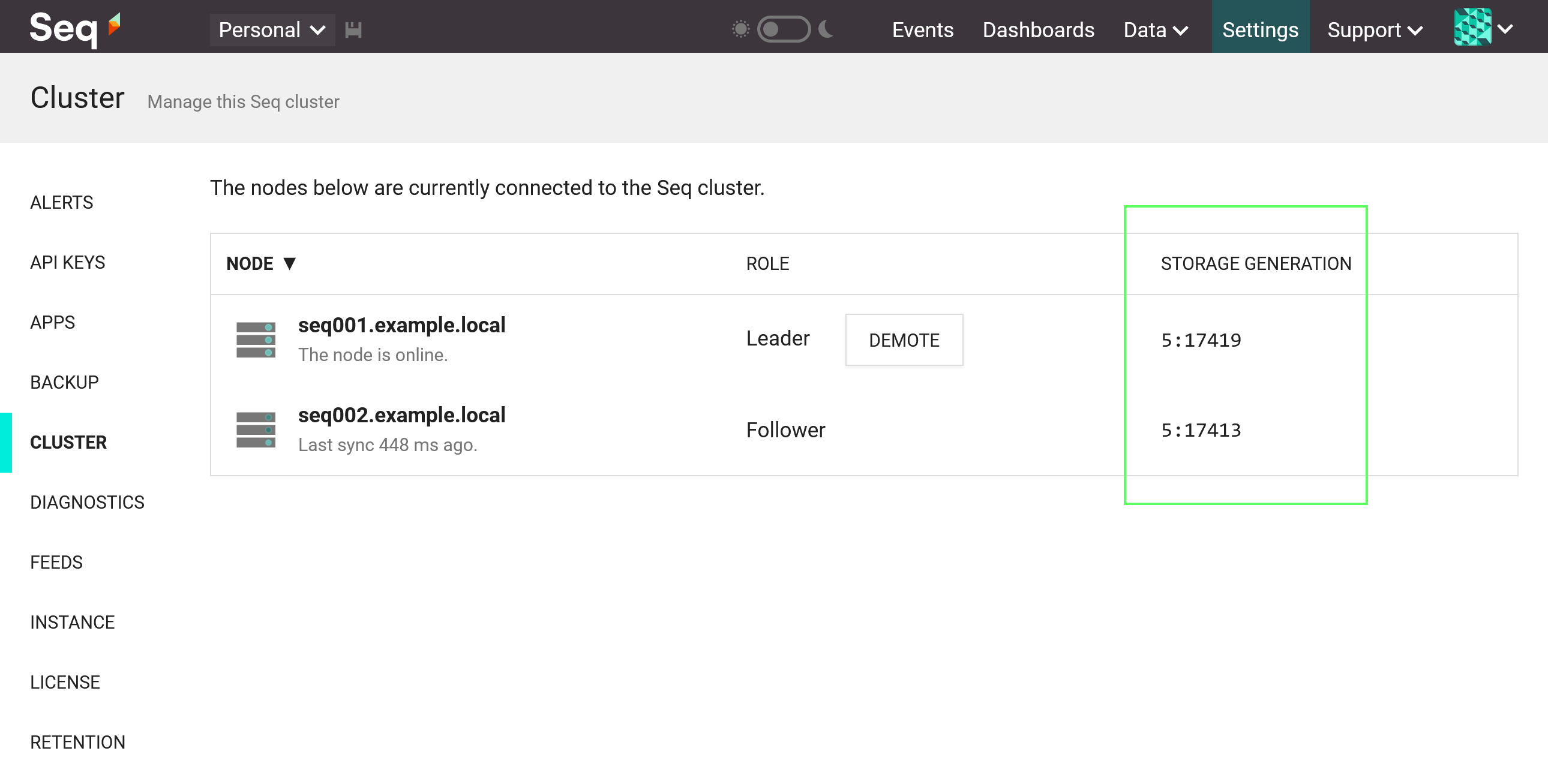

As events reach the cluster through the load balancer, they'll be ingested into the event store. Seq uses generational storage, and as each batch of events is written, the store generation will be incremented.

The Cluster screen shows the generation of the event store held by each node in the storage generation column.

Storage generations shown on the cluster screen.

The storage generation is a two-part number; the right-hand part is the "write" number, corresponding roughly to the number of written batches of events, and the left-hand part (5, above) is the "model" number, corresponding roughly to the number of buffer creation, retention policy application, and indexing operations that have occurred.

For the most part, the storage generation can be treated as an opaque identifier, but it, in combination with the follower node's last sync time, can be used to determine what replication delay currently exists between the nodes.
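As a rough illustration of comparing generations, the sketch below splits a generation into its model and write parts and estimates how many write batches the follower is behind. The textual format is an assumption: this document does not specify how the two parts are separated, so the separator is a parameter (defaulting to "."), and all names here are hypothetical.

```python
from typing import NamedTuple, Optional


class Generation(NamedTuple):
    model: int  # left-hand part
    write: int  # right-hand part


def parse_generation(text: str, separator: str = ".") -> Generation:
    """Split a two-part generation string; the separator is an assumption."""
    model, write = text.split(separator)
    return Generation(int(model), int(write))


def write_lag(leader: str, follower: str, separator: str = ".") -> Optional[int]:
    """Approximate number of write batches the follower is behind.

    Returns None when the model numbers differ, because write numbers
    are then not assumed to be directly comparable.
    """
    lead = parse_generation(leader, separator)
    follow = parse_generation(follower, separator)
    if lead.model != follow.model:
        return None
    return lead.write - follow.write
```

A lag of zero together with a recent last sync time suggests the follower is up to date. Because the write number corresponds only roughly to written batches, treat any non-zero result as an estimate.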

System administration tasks

When running multiple Seq nodes, in general, one-off administration tasks like restoring from a backup, or resetting authentication from the command-line, should be performed on the leader.

An exception is manipulation of Seq's local configuration using the seq config and seq node commands: the effect of these is local to the node on which they're run.
